The Pareto principle says that 20% of the effort on a problem gets you 80% of the value. The opposite seems to be true for generative AI.

About the author: Zsombor Varnagy-Toth is a Sr UX Researcher at SAP with a background in machine learning and cognitive science, working with qualitative and quantitative data for product development.

I first realized this as I studied professionals writing marketing copy using LLMs. I observed that when these professionals start using LLMs, their enthusiasm quickly fades away, and most return to their old way of manually writing content.

This was an utterly surprising research finding because these professionals acknowledged that the AI-generated content was not bad. In fact, they found it unexpectedly good, say 80% good. But if that’s so, why do they still fall back on creating the content manually? Why not take the 80% good AI-generated content and just add that last 20% manually?

Here is the intuitive explanation:

If you have a mediocre poem, you can’t just turn it into a great poem by replacing a few words here and there.

Say you have a house that is 80% well built. It’s more or less OK, but the walls are not straight, and the foundations are weak. You can’t fix that with some additional work. You have to tear it down and start building it from the ground up.

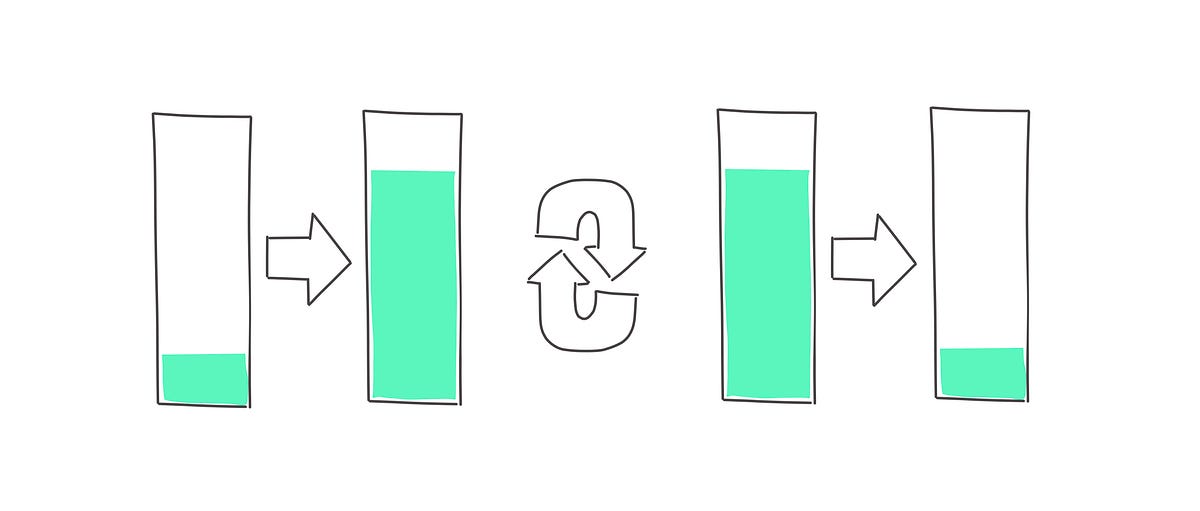

We investigated this phenomenon further and identified its root. For these marketing professionals, if a piece of copy is only 80% good, there is no individual piece in the text they could swap out to make it 100%. For that, the whole copy needs to be reworked, paragraph by paragraph, sentence by sentence. Thus, going from AI’s 80% to 100% takes almost as much effort as going from 0% to 100% manually.

Now, this has an interesting implication. For such tasks, the value of an LLM is “all or nothing.” It either does an excellent job or it’s useless. There is nothing in between.

We looked at a few different types of user tasks and found that this reverse Pareto principle applies only to tasks that meet all three of the following conditions:

- Not easily decomposable and

- Large task size and

- 100% quality is expected

If any one of these conditions is not met, the reverse Pareto effect doesn’t apply.

Writing code, for example, is more decomposable than writing prose. Code has individual parts, such as commands and functions, that can be singled out and fixed independently. If AI takes the code to 80%, it really only takes about 20% extra effort to get to the 100% result.

As for the task size, LLMs have great utility in writing short copy, such as social posts. The LLM-generated short content is still “all or nothing” — it’s either good or worthless. However, because of the brevity of these pieces of copy, one can generate ten at a time and spot the best one in seconds. In other words, users don’t need to tackle the 80% to 100% problem — they just pick the variant that came out 100% in the first place.
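The "generate ten and pick the best" argument can be made concrete with a little arithmetic. The sketch below assumes that each short draft comes out usable independently with the same probability; both the independence and the 20% hit rate in the usage line are illustrative assumptions, not figures from the research.

```python
def chance_of_usable_draft(p_good: float, n_drafts: int) -> float:
    """Probability that at least one of n independent drafts is usable.

    p_good is a hypothetical per-draft hit rate (an assumption for
    illustration, not a measured value).
    """
    if not 0.0 <= p_good <= 1.0:
        raise ValueError("p_good must be between 0 and 1")
    if n_drafts < 0:
        raise ValueError("n_drafts must be non-negative")
    # All n drafts miss with probability (1 - p_good) ** n_drafts,
    # so at least one hits with the complement of that.
    return 1.0 - (1.0 - p_good) ** n_drafts


# With an assumed 20% hit rate, ten drafts give roughly a 9-in-10 chance
# that at least one is usable as is.
print(round(chance_of_usable_draft(0.2, 10), 3))  # 0.893
```

Under these assumptions, even a modest per-draft hit rate makes batch generation worthwhile, as long as each draft is short enough to judge in seconds.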

As for quality, there are use cases where professional-grade quality is not a requirement. For example, a content factory may be satisfied with 80% quality articles.
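The three conditions and the counterexamples above can be summarized in a small sketch. The `Task` class and the true/false labels on each example are my own simplification of the cases discussed in this article, not a formal classification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    easily_decomposable: bool
    large: bool
    needs_full_quality: bool


def reverse_pareto_applies(task: Task) -> bool:
    """The effect applies only when all three conditions hold at once."""
    return (
        not task.easily_decomposable
        and task.large
        and task.needs_full_quality
    )


# Illustrative labels for the examples discussed in the article.
examples = [
    Task("long-form marketing copy", False, True, True),
    Task("code", True, True, True),                   # decomposable
    Task("short social post", False, False, True),    # small task
    Task("content-factory article", False, True, False),  # 80% is enough
]

for task in examples:
    print(f"{task.name}: {reverse_pareto_applies(task)}")
```

Only the first example satisfies all three conditions; each of the others fails exactly one of them.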

If you are building an LLM-powered product that deals with large tasks that are hard to decompose, and the user is expected to produce 100% quality, you must build something around the LLM that turns its 80% performance into 100%. It can be a sophisticated prompting approach on the backend, an additional fine-tuned layer, or a cognitive architecture of various tools and agents that work together to iron out the output. Whatever this wrapper does, that’s what gives 80% of the customer value. That’s where the treasure is buried; the LLM contributes only 20%.

This conclusion is in line with the assertion by Sequoia Capital’s Sonya Huang and Pat Grady that the next wave of value in the AI space will be created by “last-mile application providers”: the wrapper companies that figure out how to cover the last mile that creates 80% of the value.
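To make the idea of a wrapper around the LLM a little more tangible, here is a minimal sketch of one possible shape: a generate, review, revise loop. This is only one of many designs, not a description of any particular product. The `refine` function and its three pluggable callables are hypothetical names introduced for illustration; in a real system each would wrap an LLM call, a fine-tuned model, a rule-based checker, or a human reviewer.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces, introduced here for illustration only.
Generate = Callable[[str], str]           # brief -> first draft
Review = Callable[[str], List[str]]       # draft -> list of issues (empty = accept)
Revise = Callable[[str, List[str]], str]  # draft + issues -> new draft


def refine(
    brief: str,
    generate: Generate,
    review: Review,
    revise: Revise,
    max_rounds: int = 3,
) -> Tuple[str, bool]:
    """Return the final draft and whether it passed review."""
    draft = generate(brief)
    for _ in range(max_rounds):
        issues = review(draft)
        if not issues:
            return draft, True
        draft = revise(draft, issues)
    # Out of rounds: report whether the last revision passes.
    return draft, not review(draft)


# Toy stand-ins so the sketch runs without any model behind it.
def toy_generate(brief: str) -> str:
    return f"draft about {brief}"


def toy_review(draft: str) -> List[str]:
    return [] if draft.endswith("!") else ["needs more punch"]


def toy_revise(draft: str, issues: List[str]) -> str:
    return draft + "!"


print(refine("spring sale", toy_generate, toy_review, toy_revise))
```

In this framing, `generate` is the part the base LLM contributes, while `review` and `revise` are where a wrapper would have to earn its keep.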