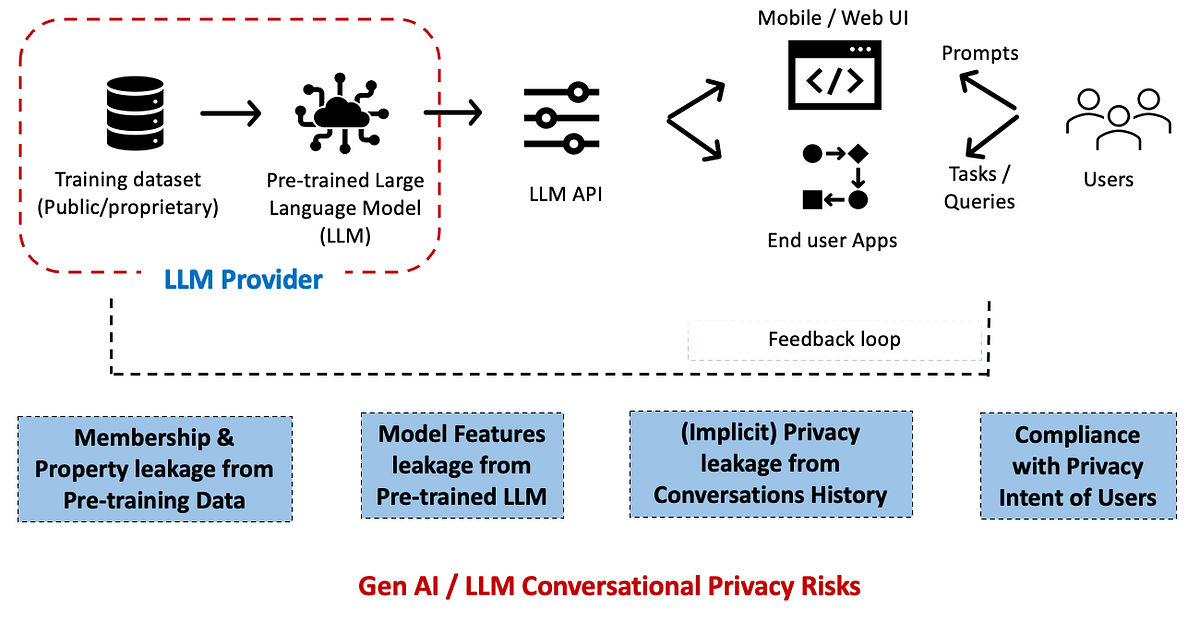

In this article, we focus on the privacy risks of large language models (LLMs) as they are deployed at scale in enterprises.

We also see a growing (and worrisome) trend of enterprises applying the privacy frameworks and controls they designed for their data science / predictive analytics pipelines, as-is, to Gen AI / LLM use cases.

This is clearly inefficient (and risky), and we need to adapt enterprise privacy frameworks, checklists and tooling to account for the novel privacy aspects that differentiate LLMs.

Let us first consider the privacy attack scenarios in a traditional supervised ML context [1, 2]. This context covers the majority of the AI/ML world today, where machine learning (ML) / deep learning (DL) models are mostly developed to solve a prediction or classification task.

There are two broad categories of inference attacks: membership inference and property…